Their mouth moves in time with their voice. Their eyes lock with yours and follow you as they speak. Dimples form at the side of their mouth as they flash you a slight smile.

You can’t be sure you’re not looking at a disguise.

There was a time when you had no reason to doubt that a person on the other end of a video call was who they appeared to be. It really wasn’t long ago. But face-swapping, a rapidly developing application of AI technology, has thrown this measure of trust into question.

Observers have warned that, as once-crude facial replicas become more difficult to identify as fake, the opportunity grows for criminals who wish to put them to use in fraudulent schemes.

David Maimon, head of fraud insights at SentiLink and a professor at Georgia State University, follows the movements of fraud gangs on social media and messaging platforms such as Telegram. He watched as scammers keenly adopted face-swapping technology and spread the word about how to use it.

“Scammers often operate in groups, which allows them to share knowledge about new technologies, exchange advice on improving their tactics, and even trade or switch victims’ details,” says David. “Part of their communication involves bragging about their fraudulent activities, often sharing videos of live scams to showcase their skills and gain credibility within their circles.”

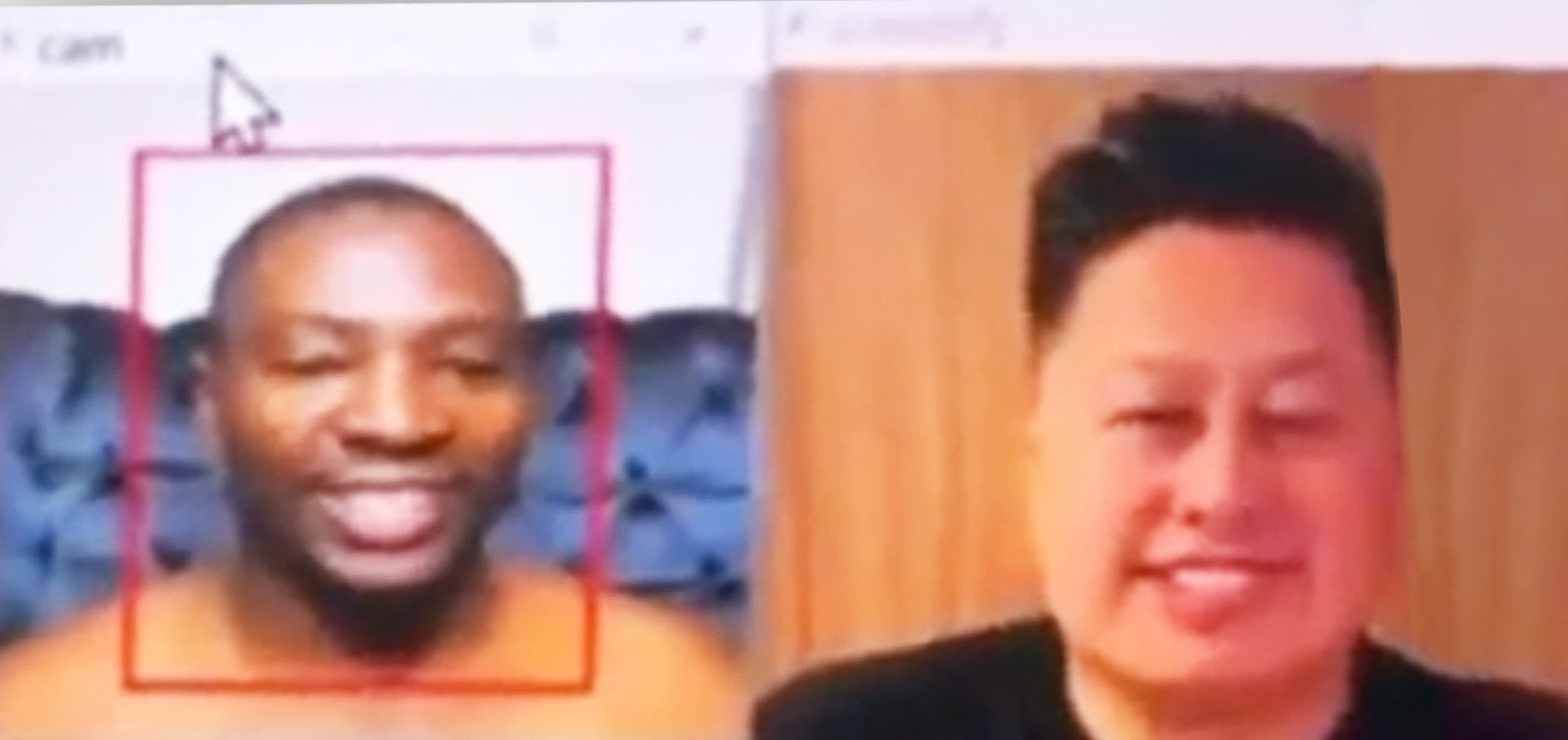

A video David pulled from one of these groups shows a man demonstrating his use of live face-swapping technology to apparently charm a potential victim in what is known as a romance scam. In the clip, a man can be seen flirting with a woman. His true face is shown beside a completely unrecognisable altered face which instantly mirrors his movements. The woman, who can only see the altered face, appears to engage happily in conversation and shows no sign of suspecting that she is speaking to a man wearing an incredibly advanced mask.


“Live face-swapping technology and its online transmission have improved dramatically over the past year and a half,” says David. “A few years ago, face-swapping was possible, but streaming the altered face in real-time during a live conversation posed significant challenges. Today, numerous software can seamlessly support live face-swapping.”

A romance scam is a trap which lures lonely hearts. A scammer will draw a victim in with feigned affection and the promise of love. Then they will steal their money. Sometimes they will cut off contact after this, though they may maintain a relationship for months or even years.

One recent high-profile example saw a woman pay more than £700,000 to a scammer who was using deepfake photos to pretend to be Brad Pitt. The scammer convinced the woman, who was going through a divorce, that the Hollywood star had fallen on hard times and was in desperate need of money to cover his medical expenses.

It can be hard to believe that anyone would be fooled by such a ploy. But, the tragic fact is, there was a person who had the will and the means of selling that scenario to an unsuspecting victim – and their means are quickly becoming more advanced.

The capacity for face-swapping to deceive is bolstered by voice-changing technology. Any major dating app offers safety advice to users which invariably suggests that a phone or video call can be used to screen potential dates. But they don’t warn that either of these lines of communication could be used to hide a caller’s identity.

David says: “The combination of face-swapping and voice-cloning technologies simplifies online fraud for perpetrators and makes it much more challenging for targets to detect. We are seeing two types of voice-changing operations. First, the use of voice-changing devices. Those are simple devices you can buy on Amazon for less than $40. The second is the use of AI to both create voices and clone them.”

The latest research suggests that voice deepfake technology is now in widespread use. In the UK in the past year, 26 per cent of consumers received a deepfake voice call, according to polling by Hiya, a call protection firm. The sums lost to these calls are remarkable. An average of £13,342 was reported lost – more than 10 times the average for scams overall.

The technology is not limited to romance fraud. It has been used in investment fraud and a range of online scams targeting individuals as well as companies.

“We are seeing them use this technology to open new bank accounts, impersonate CEOs to deceive employees into sending money to fake vendors, and carry out extortion scams,” says David.

A 2024 survey by Regula Forensics found that 49 per cent of companies had been targeted by both audio and video deepfakes, up from 37 per cent and 29 per cent respectively in 2022. Last summer, Ferrari was the subject of an attempted scam which saw a fraudster accurately mimic the southern Italian accent of CEO Benedetto Vigna.

This scam failed as the Ferrari employee who took the call, made suspicious by talk of an urgently needed transaction, asked a question about a recent book they had lent their boss. Months earlier, a scammer had better luck with a Hong Kong-based employee at British engineering company Arup, who was fooled by a face-swapping video call into transferring £20m to criminals posing as their superiors.

The money taken in such scams is mostly drawn into an international criminal enterprise, with gangs based primarily in west Africa and southeast Asia taking money from a country like Britain and transferring it into an organised crime network to fund other illicit activity.

Simon Miller, director of policy, strategy and communications at Cifas, Britain’s leading fraud prevention service, says: “Fraud and scams are inherently linked to really serious organised criminal gangs. And the whole purpose of the activity is to get money out of the UK and into other jurisdictions to fund other forms of criminal activity, including everything from people smuggling to drug smuggling to terrorism.

“It’s estimated that 72 to 73 per cent of all scams involve an actor outside of the UK.”

Earlier this month investigators in Nigeria said they had uncovered evidence linking the country’s notorious “Yahoo Boy” scammers to kidnapping, ritual killings and arms trafficking. The “Yahoo Boys” take their name from the email service they used in the early days of their online scamming.

It’s a name rendered quaint by technological development but this speaks to the enduring challenge that scams pose: they change with the times.

And the times are changing quickly.