Mr. Deepfakes, a site that provides users with nonconsensual, AI-generated deepfake pornography, has shut down.

The site, founded in 2018, is described as the “most prominent and mainstream marketplace” for deepfake porn of celebrities and individuals with no public presence, CBS News reports. Deepfake pornography refers to digitally altered images and videos in which a person’s face is pasted onto another’s body using artificial intelligence.

Now, the site has notified users it has shut down for good.

“A critical service provider has terminated service permanently. Data loss has made it impossible to continue operation,” a notice at the top of the site said, according to 404 Media.

“We will not be relaunching. Any website claiming this is fake,” the notice continued. “This domain will eventually expire and we are not responsible for future use. This message will be removed around one week.”

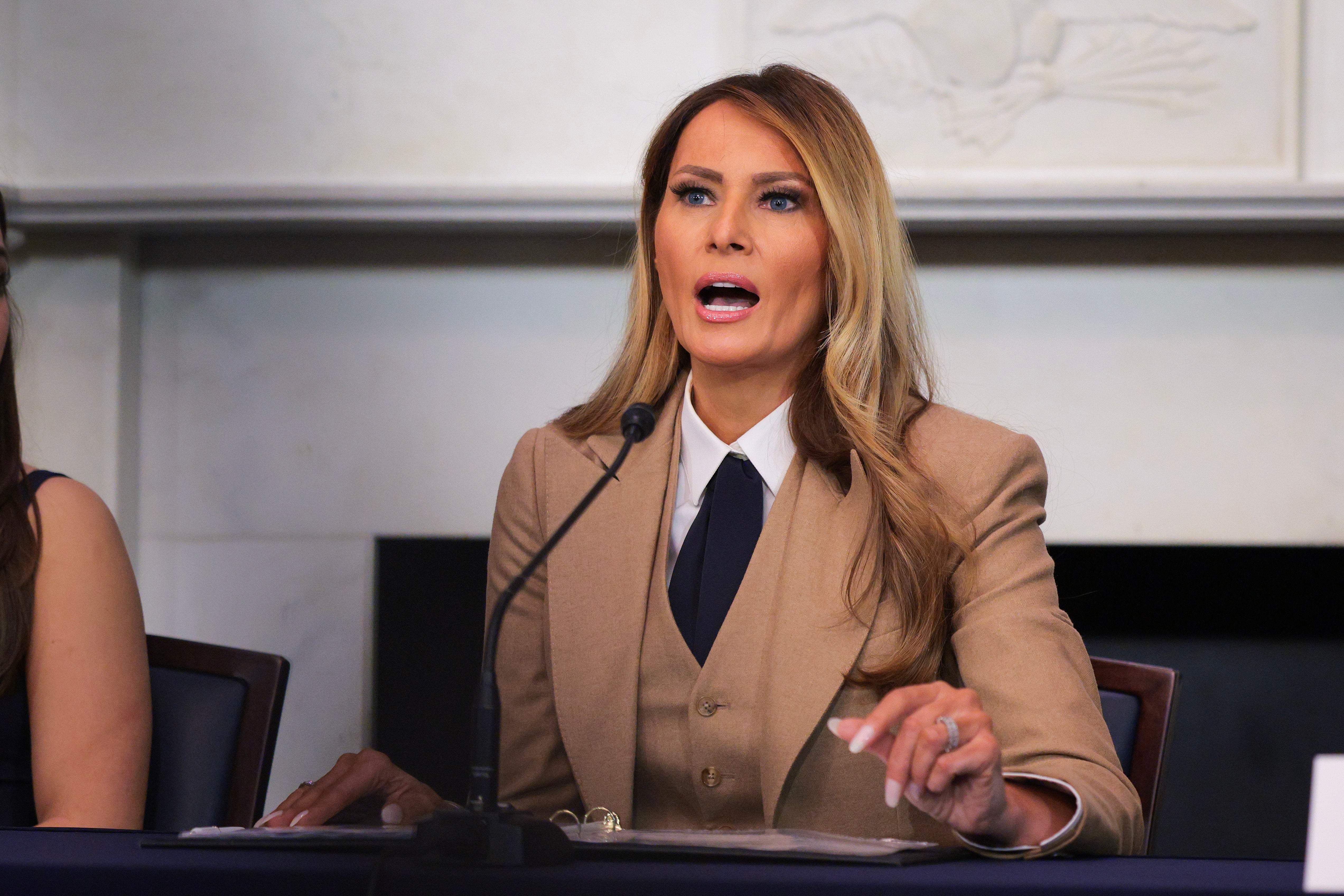

The move comes days after Congress passed the “Take It Down Act,” a bill championed by First Lady Melania Trump. The bill, now awaiting President Donald Trump’s signature, makes it a federal crime to knowingly publish nonconsensual sexual images, including AI-generated deepfakes. It also requires online platforms to remove such content within 48 hours of a victim’s request.

While many states already had laws banning deepfakes and revenge porn, this marks a rare example of federal intervention on the issue.

Henry Ajder, an expert on AI and deepfakes, told CBS News the site was a “central node” for nonconsensual deepfake porn.

“I’m sure those communities will find a home somewhere else but it won’t be this home and I don’t think it’ll be as big and as prominent. And I think that’s critical,” Ajder said.

“We’re starting to see people taking it more seriously and we’re starting to see the kind of societal infrastructure needed to react better than we have, but we can never be complacent with how much resource and how much vigilance we need to give,” he added.

Republican Senator Ted Cruz and Democratic Senator Amy Klobuchar first introduced the bill. Cruz said he was inspired by the story of Elliston Berry, who was the target of deepfake porn shared on Snapchat when she was 14.

However, some critics have spoken out against the bill, calling it too broad and arguing it could lead to the censorship of government critics, LGBTQ+ content and legal pornography, the Associated Press reports.