The Department for Work and Pensions (DWP) has revealed how it is using AI to inform decisions on whether to approve or deny certain benefit applications.

Sharing new details to the official government algorithm database, the department says it is using an AI tool called ‘online medical matching’ to help agents make decisions on applications for Employment and Support Allowance (ESA).

Claimed by 1.5 million people, this is the main health-related benefit that people can receive if they have a condition that affects how much they are able to work. The DWP says the tool is used to decide if someone is eligible for the benefit, with an agent overseeing.

It works by comparing the health condition(s) a claimant enters when applying for the benefit with a centrally maintained list and finding the ‘closest match.’ It reports an 87 per cent correct prediction rate for this process.
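To make the idea of a ‘closest match’ concrete, here is a minimal Python sketch that compares free text against a fixed list using spelling similarity from the standard library. It is an illustration only: the DWP has not published its matching code, and the condition list, the `closest_match` function and the 0.6 cutoff are all hypothetical.

```python
from difflib import get_close_matches

# Hypothetical stand-in for the centrally maintained list of conditions.
CONDITION_LIST = ["anxiety", "arthritis", "asthma", "depression", "diabetes"]


def closest_match(claimant_text, conditions=CONDITION_LIST, cutoff=0.6):
    """Return the listed condition most similar in spelling to the input.

    Returns None when nothing on the list reaches the similarity cutoff,
    which is the point at which a person would need to pick the entry.
    """
    cleaned = claimant_text.strip().lower()
    matches = get_close_matches(cleaned, conditions, n=1, cutoff=cutoff)
    return matches[0] if matches else None


if __name__ == "__main__":
    print(closest_match("Diabetis"))  # a misspelling of a listed condition
    print(closest_match("xyz"))       # nothing similar on the list
```

In this sketch a misspelling such as "Diabetis" resolves to "diabetes", while unrelated text returns nothing. A method based purely on spelling cannot link a condition described in different words to its list entry.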

The result is then used to register the claim on the ESA benefit system using an ‘automated registration solution.’ At this point, a human agent will perform a case review, making the final decision on the claim and whether ESA should be awarded.

Despite being in use since July 2020, the DWP shared details of the tool for the first time on Monday. The department says the tool has processed over 780,000 cases since its implementation, during which time it has saved 42,500 operational hours.

However, its development was not without hiccups. Developers explain that the first version of the tool, used from 2020 to 2024, achieved only a 35 per cent correct match rate, leaving 65 per cent to be corrected by an agent. This was due to the original algorithm attempting to match spellings, rather than context.

Concerns have also been raised about the implications of automation in benefit decision making. Shelley Hopkinson, head of policy and influencing at Turn2us, says: “With many struggling to contact the DWP and facing long waits for decisions, using automation to speed up processes could be positive – if done right.

“Past issues with AI-driven decision-making show how automation can introduce bias and unfairly penalise people who need support. It’s crucial that people understand how these systems work and have clear routes to challenge mistakes. The government must ensure that AI works for people, not against them.”

In December, it was revealed that a machine-learning programme used by the DWP to detect welfare fraud had shown bias according to factors like age, disability and nationality. An internal assessment of the tool said that a “statistically significant outcome disparity” was present in its results, The Guardian reported.

However, the DWP says the ESA matching tool has a low risk of bias as no personal details about the claimant are provided to the AI model, just the medical condition(s) they share.

The department’s risk assessment of the tool also addresses the possibility that its use makes any human agent’s decision less meaningful, saying: “To mitigate this, staff have received training to ensure they check the result, and do not simply accept the result provided by the solution.”

“When the claim comes through, it gives the agent some tasks on the bottom of the screen. One of these is to check the IRG code which refers to the health condition and medical evidence.”

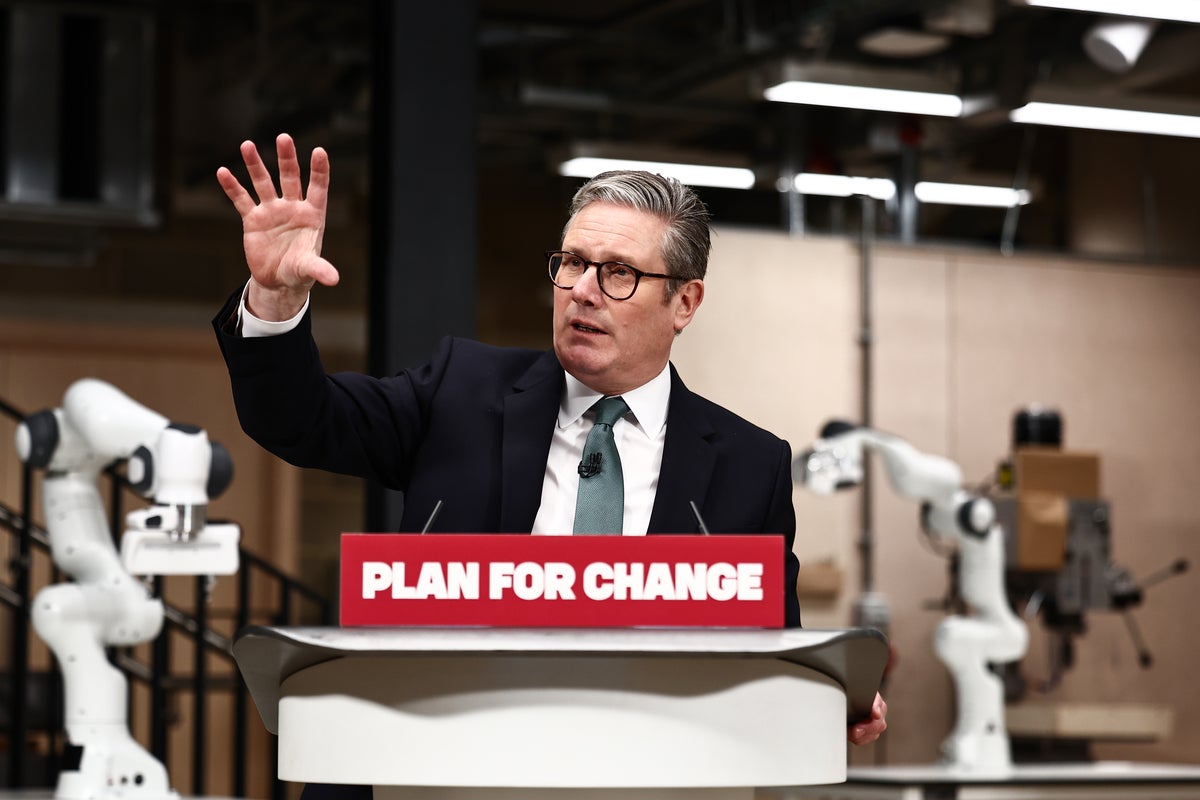

Details about the tool come after prime minister Keir Starmer outlined Labour’s plans to “turbocharge” the use of artificial intelligence in the UK in January. He said new technologies will “revolutionise” public services, including welfare, schools and health.

But the ESA matching tool is the first to be shared by the DWP on the algorithm transparency register, despite this being a requirement across all government departments for a year. The DWP has said that an inventory of all the AI tools in use at the department is held internally, but has resisted calls to publish this information.

Asked in September to share the list under the Freedom of Information Act, the department responded: “Allowing public authorities like the DWP to choose how and when they publish information is of significant public interest. Managing the distribution of information is crucial for public affairs, and authorities should reasonably control this process. Thus, the Department is justified in organising its own publication methods.”