New analysis investigating X’s algorithm has revealed how the platform played a “central role” in spreading false narratives fuelling riots in the UK last summer.

Amnesty International’s analysis of the platform’s own source code, which was published in March 2023, has revealed how it “systematically prioritises” content that “sparks outrage” – without adequate safeguards to prevent harm.

The human rights group said the design of the software created “fertile ground for inflammatory racist narratives to thrive” in the wake of the Southport attack last year.

On 29 July 2024, three young girls – Alice da Silva Aguiar, Bebe King and Elsie Dot Stancombe – were murdered, and 10 others injured, by 17-year-old Axel Rudakubana at a Taylor Swift-themed dance class.

Before official accounts were shared by authorities, false statements and Islamophobic narratives began circulating on social media last summer, the report said.

This misinformation resulted in weeks of racist riots which spread across the country, with a number of hotels housing asylum seekers targeted by the far right.

Amnesty International said that in the critical window after the Southport attack, X’s algorithmic system meant that inflammatory posts went viral, even if they contained misinformation.

The report found no evidence that the algorithm assesses a post’s potential harm before boosting it based on engagement, allowing misinformation to spread before efforts to share correct information were possible.

“These engagement-first design choices contributed to heightened risks amid a wave of anti-Muslim and anti-migrant violence observed in several locations across the UK at the time, and which continues to present a serious human rights risk today,” the report read.

“As long as a tweet drives engagement, the algorithm appears to have no mechanism for assessing the potential for causing harm – at least not until enough users themselves report it.”

The report also highlights the bias towards “Premium” users on X, such as Andrew Tate, who posted a video falsely asserting the attacker was an “undocumented migrant” who “arrived on a boat”.

Tate had previously been banned from Twitter for hate speech and harmful content, but his account was reinstated in late 2023 under Elon Musk’s “amnesty” for suspended users.

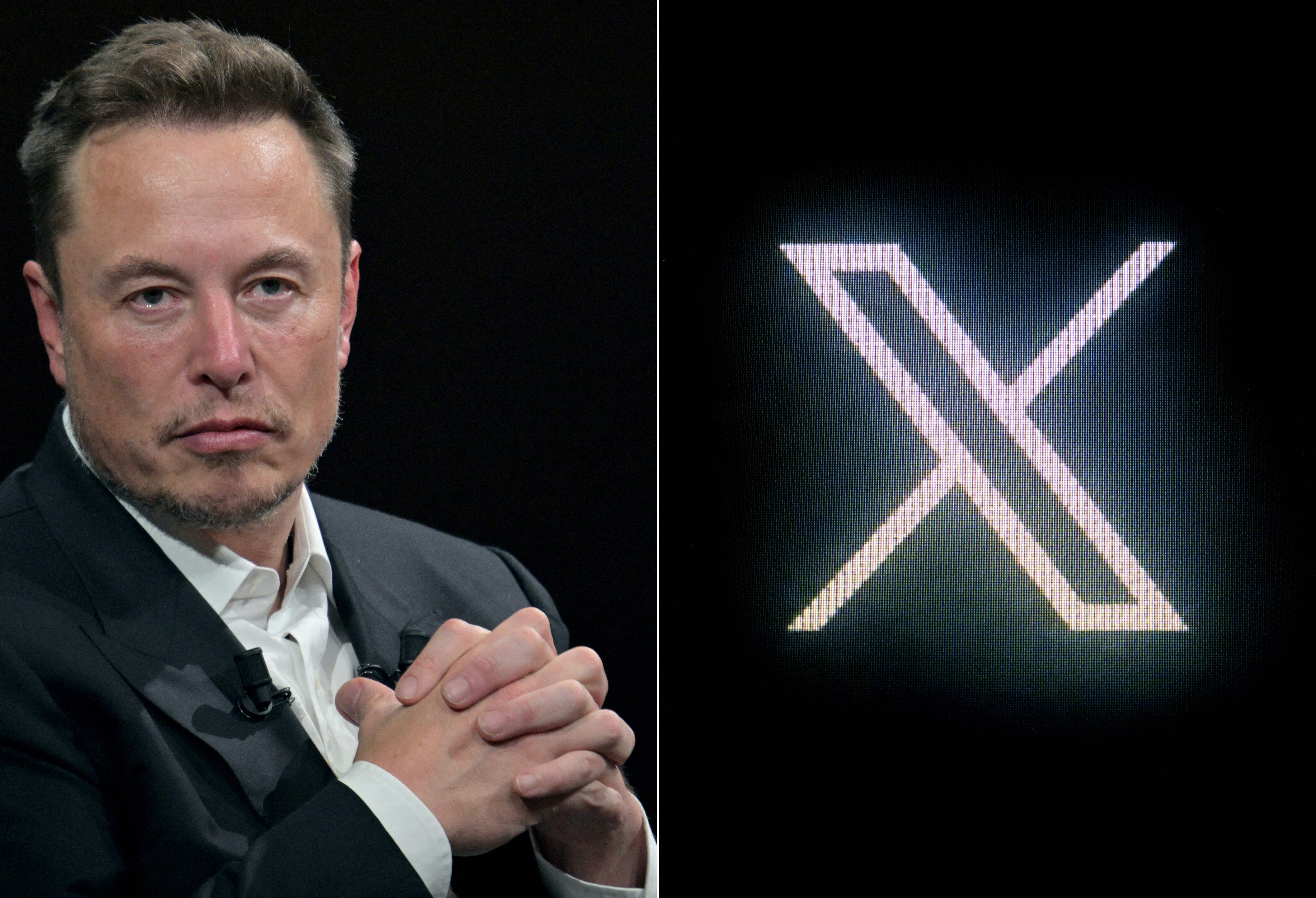

Since his takeover of the platform in 2022, Musk has also laid off its content moderation staff, disbanded Twitter’s Trust and Safety advisory council and fired trust and safety engineers.

Pat de Brún, Head of Big Tech Accountability at Amnesty International, said: “Our analysis shows that X’s algorithmic design and policy choices contributed to heightened risks amid a wave of anti-Muslim and anti-migrant violence observed in several locations across the UK last year, and which continues to present a serious human rights risk today.

“Without effective safeguards, the likelihood increases that inflammatory or hostile posts will gain traction in periods of heightened social tension.”

An X spokesperson said: “We are committed to keeping X safe for all our users. Our safety teams use a combination of machine learning and human review to proactively take swift action against content and accounts that violate our rules, including our Violent Speech, Hateful Conduct and Synthetic and Manipulated Media policies, before they are able to impact the safety of our platform.

“Additionally our crowd-sourced fact checking feature Community Notes plays an important role in supporting the work of our safety teams to address potentially misleading posts across the X platform.”